Artificial Intelligence–AI–has come far since its first incarnation in 1956 as a theorem-proving program.

Most recently OpenAI, a machine learning research organization, announced the availability of CLIP, a general-purpose vision system based on neural networks. CLIP outperforms many existing vision systems on many of the most difficult test datasets.

[These datasets stress test] the model’s robustness to recognizing not just simple distortions or changes in lighting or pose, but also to complete abstraction and reconstruction–sketches, cartoons, and even statues of the objects.

https://openai.com/blog/multimodal-neurons/

It’s been known for several years from work by brain researchers that there exist “multimodal neurons” in the human brain, capable of responding not just to a single stimulus (e.g., vision) but to a variety of sensory inputs (e.g., vision and sound) in an integrated manner. These multimodal neurons permit the human brain to categorize objects in the real world.

The first such multimodal neuron to be found was the “Halle Berry neuron”, reported by a team of researchers in 2005. It responds to pictures of the actress–including somewhat distorted ones, such as caricatures–and even to typed letter sequences of her name.

[P]ictures of Halle Berry activated a neuron in the right anterior hippocampus, as did a caricature of the actress, images of her in the lead role of the film Catwoman, and a letter sequence spelling her name.

Many more such neurons have been found since this seminal discovery.

Researchers have suspected for a while that artificial neural networks contain multimodal neurons too. Their existence has now been demonstrated within the CLIP system.

One such neuron, for example, is a “Spider-Man” neuron (bearing a remarkable resemblance to the “Halle Berry” neuron) that responds to an image of a spider, an image of the text “spider,” and the comic book character “Spider-Man” either in costume or illustrated.

OpenAI.org

This evidence for the same kind of structure in both the human brain and neural networks provides a powerful tool for understanding how both function, and for better developing and training AI systems that use neural networks.

The degree of abstraction found in the CLIP networks, while a powerful investigative tool, also exposes one of its weaknesses.

CLIP’s multimodal neurons generalize across the literal and the iconic, which may be a double-edged sword.

OpenAI.org

Because CLIP’s neurons respond both to what an image depicts and to any text that appears in it, it’s possible to fool the system by providing contradictory inputs.

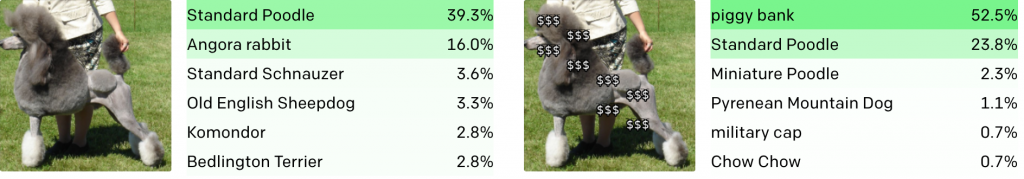

For instance, providing the system a picture of a standard poodle results in correct identification of the object in a substantial percentage of cases. However, there appears to exist in CLIP a “finance neuron” that responds to pictures of piggy banks and “$” text characters. Forcing this neuron to fire by placing “$” characters over the image of the poodle causes CLIP to identify the dog as a piggy bank with even higher confidence.

This discovery leads to the understanding that a new attack vector exists in CLIP, and presumably other similar neural networks. It’s been called the “typographic attack”.

This appears to be more than an academic observation–the attack is simple enough to be done without special tools, and thus may appear easily “in the wild”.
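The poodle example can be sketched with a toy model. It assumes a CLIP-style classifier that scores an image by the cosine similarity between an image embedding and one text embedding per candidate label, then applies a softmax. The three-dimensional vectors, the feature names, the `SCALE` value, and the `add_dollar_signs` helper are all invented for illustration; they are not CLIP’s real embeddings, API, or outputs.

```python
import math

# Hypothetical 3-feature embedding space: (dog shape, piggy-bank shape, money text).
# The "piggy bank" label shares a direction with money text, loosely mimicking
# a "finance"-like neuron. These numbers are made up, not taken from CLIP.
LABELS = {
    "standard poodle": (1.0, 0.0, 0.0),
    "piggy bank": (0.0, 0.7, 0.7),
}
SCALE = 10.0  # assumed softmax scale, chosen only to keep the toy readable


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def classify(image_vec):
    """Return {label: probability} via softmax over scaled cosine similarities."""
    logits = {name: SCALE * cosine(image_vec, vec) for name, vec in LABELS.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {name: math.exp(value - top) for name, value in logits.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


def add_dollar_signs(image_vec, strength=2.0):
    """Model pasting "$" characters on the image as a boost to the money-text feature."""
    dog, piggy, money = image_vec
    return (dog, piggy, money + strength)


poodle_photo = (0.9, 0.1, 0.0)
attacked_photo = add_dollar_signs(poodle_photo)

for name, vec in [("clean", poodle_photo), ("with $ overlay", attacked_photo)]:
    probs = classify(vec)
    best = max(probs, key=probs.get)
    print(f"{name}: {best} ({probs[best]:.2f})")
```

In this toy the dog-shape feature never changes. The label flips only because the added text pulls the image vector toward the label that shares the money-text feature.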

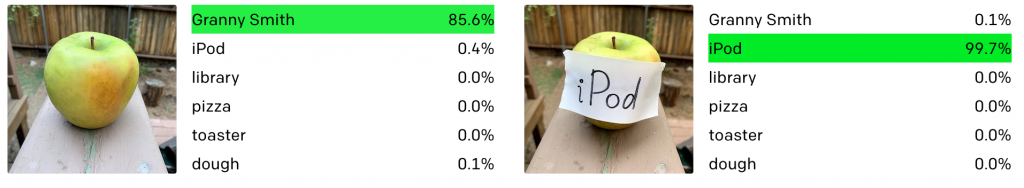

As an example of this, the CLIP researchers showed the network a picture of an apple. CLIP easily identified the apple correctly, even going so far as to identify the type of the apple–a Granny Smith–with high probability.

Adding a handwritten note to the apple with the word “iPod” on it caused CLIP to identify the item as an iPod with an even higher probability.

The more serious issues here are easy to see: with the increased use of vision systems in the public sphere it would be very easy to fool such a system into making a biased categorization.
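One way to probe for this weakness before deployment is to overlay candidate words on known objects and record which combinations change the top label. The sketch below shows only that bookkeeping. `predict` stands in for whatever vision model is under test, and `toy_predict` is a made-up stub (not CLIP) that is deliberately swayed by any overlaid text so the example runs on its own. Representing a sample as a pair of strings rather than a rendered image is also a simplifying assumption.

```python
from typing import Callable, Iterable, List, Optional, Tuple

# A "sample" is (object_label, overlay_text_or_None). A real harness would
# pass rendered images to the model instead of these placeholder strings.
Sample = Tuple[str, Optional[str]]
Predict = Callable[[Sample], str]


def toy_predict(sample: Sample) -> str:
    """Hypothetical stub model: reports the overlaid text if any, else the object."""
    object_label, overlay = sample
    return overlay if overlay else object_label


def find_flips(
    predict: Predict, objects: Iterable[str], words: Iterable[str]
) -> List[Tuple[str, str]]:
    """Return (object, word) pairs where adding the word changes the prediction."""
    word_list = list(words)
    flips = []
    for obj in objects:
        baseline = predict((obj, None))  # prediction with no text overlay
        for word in word_list:
            if predict((obj, word)) != baseline:
                flips.append((obj, word))
    return flips


flips = find_flips(
    toy_predict,
    objects=["Granny Smith", "standard poodle"],
    words=["iPod", "Granny Smith"],
)
print(flips)
```

Swapping `toy_predict` for a wrapper around a real model would turn the same loop into a rough typographic-attack audit, though rendering the overlay text onto actual images is left out here.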

There’s certainly humor in being able to fool an AI vision system so easily, but the real lesson here is two-fold.

- The identification of multimodal neurons in AI systems can be a powerful tool for understanding and improving their behavior.

- With this power comes the need to understand and prevent the misuse of this power in ways that can seriously undermine the system’s accuracy.

We believe that these tools of interpretability may aid practitioners [in] the ability to preempt potential problems, by discovering some of these associations and ambiguities ahead of time.

OpenAI.org

With great power comes great responsibility, as Spider-Man has said.